Deepfake evolution: Semantic Fields and Discursive Genres (2017-2021)

Jacob Bañuelos Capistrán

ICONO 14, Revista de comunicación y tecnologías emergentes, vol. 20, no. 1, 2022

Asociación científica ICONO 14

Jacob Bañuelos Capistrán, jacobisraell@gmail.com

Media and Digital Culture, Tecnológico de Monterrey, México

Translation to English: Gerardo Piña

Received: 1 July 2021

Revised: 6 August 2021

Accepted: 17 February 2022

Published: 3 June 2022

Abstract: The aim of this study is to analyse the evolution of the semantic fields and discursive genres of deepfake from its appearance in 2017 until 2021. The research is a literature review of specialised popular-science, informative, theoretical and scientific articles on deepfake, carried out with a longitudinal analytical-synthetic methodology in three phases covering 2017-2021, in which the narrative quality of the articles is assessed with the SANRA scale. The study identifies the dominant semantic fields and discursive genres of deepfake, as well as the evolution of its beneficial and criminal uses. It reveals a progressive discursive evolution in which new semantic fields and discursive genres of deepfake emerge, with a tendency towards beneficial, and not only criminal, uses in the audiovisual industry, activism, experimental art, commerce, medicine, advertising, propaganda and education, among others.

Keywords: deepfake; semantic fields; discursive genres; communication; evolution.

1. Introduction

This study aims to analyse the evolution of deepfake semantic fields and discursive genres from the emergence of deepfake in 2017 up to 2021. These fields and genres have been evolving rapidly and dynamically since deepfake first appeared on Reddit in 2017 (Ajder et al., 2019). Deepfake is an emerging media species with an impact on a wider landscape shaped by the industry and consumption of audiovisual culture.

Deepfake feeds on the technological evolution of image and sound production through artificial intelligence (AI), content published on social networks and digital platforms, large databases (big data), collective audiovisual memory and the socio-media architecture of post-truth.

The evolution of deepfake shows a discursive expansion and dispersion across semantic fields, discursive genres and emerging technologies in which both beneficial and criminal content is produced. Beneficial uses of deepfake bring new forms of audiovisual expression that contribute to education, medicine and health, science, commerce, fashion, e-commerce, advertising, security and entertainment, as well as to social, political, artistic and cultural reflection.

The study draws on techno-aesthetic theory (Simondon, 2013), semantic field theory (Lewandowski, 1982), discourse theory (Van Dijk, 1989) and post-truth theory (Ball, 2017; Ibañez, 2017; Amorós, 2018; McIntyre, 2018; Kalpokas, 2019; Cosentino, 2020). From these frameworks we analyse the semantic fields and emerging discursive genres that together make up an expanded industrial and cultural landscape of deepfake.

Technically, deepfakes are a product of AI and of deep learning techniques used to train neural networks. These deep neural networks can artificially generate and manipulate video, images and audio, making it possible to create hyper-realistic fake content automatically, content that can be interpreted as true or real (Kietzmann et al., 2020, p. 136).

A deepfake can make it virtually impossible to know whether a person’s image or voice is fake or real, and it casts suspicion on the authenticity of any photograph, sound recording or audiovisual document, which may have been manipulated or artificially created (Kietzmann et al., 2020). A deepfake is a product that combines, overlays, merges or replaces various types of content to produce synthetic media with AI and deep learning, thereby undermining the notion of authenticity (Maras and Alexandrou, 2018; Nguyen, 2019).

Deepfake, understood as a technical-cultural object, is analysed here through Simondon’s techno-aesthetic theory (2013). A technical object is made up of an “inner layer” (technical, aesthetic and cultural), an “intermediate layer” (technological mediation) and an “outer layer” (cultural manifestations, uses and practices) (Simondon, 2013). This study focuses on the “outer layer” of deepfake as a technical and cultural object: it describes its semantic fields, discursive genres and emerging technological applications, as well as its most common beneficial and criminal uses.

The technological, social, political, communicational, aesthetic, industrial and legal knowledge, conceptualisation and practice of deepfakes have given rise to diverse semantic fields (Lewandowski, 1982, p. 46), which articulate specialised terminology, reading pacts, codification and interpretative meaning drawn from diverse conceptual territories, motivations and forms of operation.

Semantic fields are understood as groups that form units of meaning and consist of sets of words with interrelated objective contents (Ipsen, 1924; Lewandowski, 1982, p. 47). They can also be described as the set of formal and meaningful relations entailed by a group of words, and as a set of coexisting facts interpreted as dependent on each other (Lewandowski, 1982, pp. 44-46). In short, a semantic field is the set of terms whose meanings refer to a common concept (Martínez, 2003).

Discursively, a deepfake is a sound, visual or audiovisual product that simulates the formal characteristics of a pre-existing genre of expression and appropriates that genre’s conventions for treating content, so that an artificially constructed or fake product appears authentic and acquires an attributed meaning.

A discursive genre is understood as a specific form of language use (text, sound or image), that is, a complete communicative event in a given social situation. The meaning of a discourse, in this case a deepfake, is a cognitive structure. It includes not only observable verbal and non-verbal elements, social interactions and speech acts, but also the cognitive representations and strategies involved in producing or comprehending the discourse (Van Dijk, 1989).

The discursive genres of deepfake exist in society as a form of social practice and produce interactions between individuals and social groups. According to Van Dijk (1993, cited in Meersohn, 2021), discourse studies, here the study of deepfake, should explain which attributes of sound, visual and audiovisual texts determine which attributes of social, political and cultural structures, and vice versa.

The digital life of the deepfake takes place in a socio-media scenario marked by the phenomenon of post-truth (Ball, 2017; Ibañez, 2017; D’Ancona, 2018), fertile ground for the proliferation of discourses of simulation and falsification, as well as for a critical questioning of discourses of make-believe, such as journalism, science, religion, politics and information systems.

2. Method

The study consisted of three consecutive diachronic research phases, carried out between 1 and 15 June 2021 and covering the period 2017-2021:

1) A bibliographic exploration following a longitudinal analytical-synthetic methodology (Rodríguez Jiménez and Pérez-Jacinto, 2017), using Google Search to explore the origins, cases and definitions of deepfake through journalistic articles, reports and specialised texts.

2) An exploration following the bibliographic research methodology described by Codina (2018), Piasecki et al. (2018) and De-Granda-Orive et al. (2013), using search engines for academic and scientific texts.

3) An exploration of app development and of the technological processes of image and sound manipulation, focusing especially on apps for mobile devices, as part of the evolution of shallowfakes (or cheapfakes).

In total, 65 publications were reviewed, analysed and assessed using the SANRA Scale for the Assessment of Narrative Review Articles (Baethge et al., 2019).

The articles analysed obtained an average SANRA score of 10.3 for argumentative and narrative quality, out of a maximum of 12, so their quality is high by this measure. The cataloguing of the semantic fields and discursive genres of deepfake also draws on this SANRA assessment which, together with the searches in the scientific databases cited, gave it greater clarity and grounding. The 65 publications reviewed and analysed are cited in this text and inventoried in Bañuelos (2022).
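As a reading aid for the scores reported above: the SANRA scale (Baethge et al., 2019) rates a narrative review on six items (among them the description of the literature search, referencing and scientific reasoning), each scored 0, 1 or 2. The worked equation below sketches how the reported average relates to that maximum. The symbols are our own notation, not the study's: s_ij is the score of item i for publication j, S_j is that publication's total, and n is the number of publications, taken here, as the text states, to be the 65 assessed.

```latex
\begin{aligned}
S_j &= \sum_{i=1}^{6} s_{ij}, \qquad s_{ij} \in \{0, 1, 2\}, \qquad 0 \le S_j \le 12, \\
\bar{S} &= \frac{1}{n} \sum_{j=1}^{n} S_j = 10.3 \quad (n = 65), \qquad \frac{\bar{S}}{12} = \frac{10.3}{12} \approx 0.86 .
\end{aligned}
```

An average of roughly 86% of the maximum possible score is what supports reading the corpus as being of high argumentative and narrative quality.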

In all databases, the term “deepfake” was searched in all fields (title, topic, keywords, abstract, etc.), as was the combination of “deepfake” and “discourse”. The Web of Science search used the Core Collection and did not include the Medline, KCI-Korean Journal Database, Russian Science Citation Index or SciELO Citation Index databases.

The exploration was oriented towards information related to Social Sciences, Communication, Legislation, Ethics and Entertainment. The search terms used were “deepfake” alone and “deepfake” combined with “discourse”, “augmented reality”, “ethics”, “apps” and “porn”. Searches were run, and articles included, in both English and Spanish. Once a relevant article was found, similar searches were conducted to locate further articles from the same source on news, business, media, misinformation, entertainment or technology.

For the longitudinal analytical-synthetic search we consulted popular-science articles, reports and periodicals such as MIT Technology Review, Deeptrace (Ajder et al., 2019, 2020), Motherboard (Vice), The New York Times, The Washington Post, The Guardian, The Economist, The Times and the BBC. For the exploration of academic and scientific articles, the Scopus, Web of Science, Taylor and Francis Journals, Google Scholar, Academia.edu and ResearchGate databases were used; for the cross-sectional search of deepfake applications and technological developments, specialised sources such as Nguyen et al. (2019), Chen et al. (2020), Paris and Donovan (2019), Westerlund (2019) and Gómez-de-Ágreda et al. (2021) were consulted.

3. Results

Deepfake semantic fields

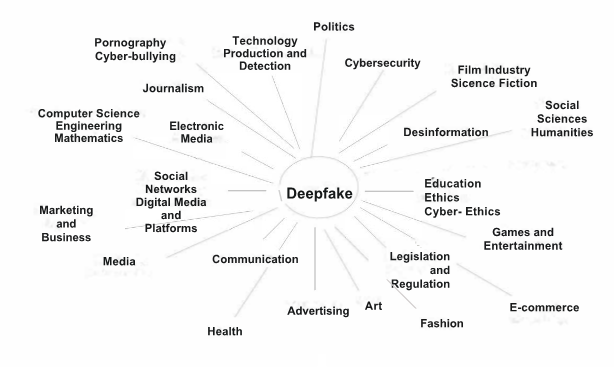

The semantic fields of deepfake have strengthened and expanded. The bibliographic search for the word deepfake in scientific databases shows that the most relevant and strongest semantic fields belong to Computer Science, Engineering and Mathematics. However, semantic fields related to the Social Sciences and Humanities, although secondary, have grown progressively in areas such as law, ethics, communication, disinformation, pornography, advertising and security (see Figure 1).

Scopus is a telling case: it lists 603 articles on deepfake between 2018 and 2021, of which 447 are related to computer science, 224 to engineering, 166 to social sciences, 74 to mathematics, 68 to decision sciences, 39 to arts and humanities, 36 to scientific material, 31 to physics and astronomy, 29 to psychology and 24 to business, management and accounting. Because a document can be assigned to more than one subject area, these figures overlap and add up to more than 603. Deepfake research is thus much more developed in computer science and engineering than in the social sciences and humanities.

Similarly, the search for “deepfake” and “discourse” in Scopus returns only 48 documents published between 2018 and 2021, of which 37 are related to social sciences, 13 to computer sciences, 12 to arts and humanities, 5 to psychology and 4 to business. The social science articles deal with topics such as regulation, sexual offences, discrimination against women, pornography, politics, disinformation, social regulation, the social construction of technology, security, cyber-ethics, racism, advertising and science fiction.

In the social sciences and humanities, the fields of communication, disinformation, journalism, electronic media, digital media, socio-digital platforms and networks, film industry, science fiction, education, art, games and entertainment, legislation and social regulation, ethics, cyber-ethics, pornography, cyber-bullying, politics, advertising, marketing, business, e-commerce and fashion stand out; in health-related areas, psychology and scientific material are highlighted.

In the semantic fields pioneered by deepfake, a criminal industry has developed around disinformation practices, pornography and fake news, alongside work on their detection (Fraga-Lamas et al., 2020; Güera and Delp, 2018; Sohrawardi et al., 2019; Maras and Alexandrou, 2018; Cerdán et al., 2020; Xu et al., 2021; Adriani, 2019; Cooke, 2018), as well as around bots, forgery, extortion, impersonation, defamation, harassment and cyberterrorism (Gosse and Burkell, 2020; Westerlund, 2019; Vaccari and Chadwick, 2020; Gómez-de-Ágreda et al., 2021; Temir, 2020; Ajder et al., 2020; Barnes and Barraclough, 2019).

At the same time, semantic fields of deepfake are emerging that shape a powerful audiovisual industry encompassing technological development in production, detection and cybersecurity, and the marketing of specialised software and mobile applications. This is coupled with a vigorous entertainment industry spanning film production, video games, social media applications, business development, marketing, tourism, smart assistants, healthcare applications and science (Westerlund, 2019).

Figure 1. Semantic fields of deepfake, 2017-2021

Source: own elaboration, 2021.

Discursive genres of deepfake

As noted above, discursive genres acquire their own characteristics in a given thematic or formal field and are shared forms of expression that produce interactions between individuals and social groups, according to Van Dijk (quoted in Meersohn, 2021). The discursive genres reviewed in this study are considered the “outer layer” (Simondon, 2013) of deepfake, that is, manifestations in the cultural uses and practices of a techno-aesthetic and cultural object, experienced in dynamic, progressive, expanding and changing digital scenarios.

The cataloguing of discursive genres and the map of deepfake discursive genres for 2017-2021 (Figure 2) are based on the documentary research described in the Method section and account for the most significant cases. The relevance criteria used to select the cases included in this cataloguing are historical importance, appearance in several publications, originality, and diversification towards new non-criminal applications.

The most relevant discursive genres to appear between the origin of deepfake in 2017 and 2019 are non-consensual pornography, porn-extortion, denunciation, virtual performances, living portraits, social campaigns, entertainment parodies, anti-propaganda, political statements, political satire and art (Westerlund, 2019; Campbell et al., 2021; Kwok and Koh, 2020; Whittaker et al., 2021; Gómez-de-Ágreda et al., 2021; Initiative, 2020).

Between 2019 and 2021 there was a significant emergence of beneficial deepfake discursive genres such as sports broadcasts (AI-automated video), entertainment, education, talking-head models, portraiture (Deep Nostalgia), social campaigns, social and political activism, health and medical uses, tourism, advertising, propaganda, fashion (virtual showrooms, Amazon StyleSnap, Google Lens), e-commerce and retail apps, intelligent assistants, cinema and video streaming, video games, telepresence, AI models (Generated Photos), digital humans, video conferencing, multi-player games, memes, artistic experimentation, whistleblowing, virtual chat, chatbots, personal avatars, psychological therapy and scientific material. Criminal uses in the same period include fake news, disinformation, pornography, cyberbullying and deepfake bots (deepbots) (Westerlund, 2019; Campbell et al., 2021; Kwok and Koh, 2020; Whittaker et al., 2021; Garimella and Eckles, 2020; Oliveira-Teixeira et al., 2021; Gómez-de-Ágreda et al., 2021).

As Figure 2 shows, discursive genres expanded and diversified considerably between 2012 and 2021, and genres with beneficial uses have proliferated from 2019 onwards.

Some genres run across the whole period and evolve along with deepfake techniques. Pornography, for example, was an initial discursive genre and has also become a broad semantic field in which legal, ethical and regulatory issues are debated, above all the way pornographic deepfakes have mainly affected women worldwide (Martínez et al., 2019; Cole, 2019). An example of this discursive, technological and strategic evolution of pornographic deepfake is the case of Telegram chatbots (Ajder et al., 2020).

Figure 2. Map of deepfake discursive genres, 2017-2021

Source: own elaboration, 2021.

Between 2012 and 2016 we find incipient, foundational deepfake techniques such as deep neural networks (2012), generative adversarial networks (GANs, 2014), deep learning (2014) and Face2Face (2016). In 2016 an incipient first genre, political satire, appears in Presidential Avengers: Uncivil War (Parkinson, 2016), produced through face swapping (Paris and Donovan, 2019, p. 11).

In 2017, the expansion of deepfake began when a Reddit user called “deepfakes” shared, on the r/deepfakes subreddit, pornographic videos in which he had inserted the faces of famous actresses onto the bodies of porn actresses. He also shared open-source deep learning code based on popular libraries, and deepnudes began to proliferate illegally on various sites. This led to an explosion of porn sites specialising in deepnudes or nudefakes, and to the emergence of a genre called celeb-porn on sites such as PornHub (where porn deepfakes were banned in 2018) and Mr. Deepfakes (where they continue to be published).

The evolution of deepfake porn continues into 2021 with Telegram chatbots, or deepbot channels, that “undress” a woman from a photo, following in the footsteps of the DeepNude app, which was shut down in 2019. Some authors have called this practice “automating image abuse”; it affected more than 100,000 women in 2020 (Ajder et al., 2020; Hao, 2020).

In 2018, a case of porn-extortion targeted the journalist Rana Ayyub (Ayyub, 2018a, 2018b), who was harassed through a deepfake porn video intended to silence her after a political denunciation, as part of a disinformation and smear campaign on digital networks. The same year, Jordan Peele published You Won’t Believe What Obama Says in This Video! on BuzzFeed (Silverman, 2018), initiating the discursive genre of denunciation by warning of the risks of disinformation that deepfakes can generate. Peele’s piece builds on the pioneering idea of learning lip sync from audio in Synthesizing Obama (2017), via Face2Face (Suwajanakorn et al., 2017a and 2017b) (Ajder et al., 2019).

The end-of-year message of Gabonese President Ali Bongo Ondimba stands out in 2018 (Gabon 24, 2018), a speech that belongs to the genre of political propaganda and also served as counter-propaganda. Opposition forces interpreted the video as a deepfake, and the belief that the president had died helped trigger an attempted coup d’état. The opposition could not prove that the video was a deepfake, and days later the president appeared at a public event, dispelling the claim that the video was fake.

In 2019, a slight expansion of deepfake discursive genres into art-related expressions (AI art video performances) is already noticeable in the works of Klingemann (Sotheby’s, 2019), who builds a Twitter gallery under the hashtag #BigGAN. The same year saw the start of living portraits, or talking-head models, built with convolutional neural network technology developed by Samsung (Zakharov et al., 2019; Nield, 2020), a genre that expanded with the living portraits of Deep Nostalgia in the MyHeritage app (2021).

Entertainment is strengthened through parody as a discursive genre, as in the work of Ctrl Shift Face, The Fakening and FaceToFake on YouTube, who produce deepfakes by fusing actors’ faces into scenes taken from films and television programmes. Examples include Bill Hader channels Tom Cruise (Ctrl Shift Face, 2019), in which Hader’s face is intermittently swapped for Tom Cruise’s during an interview, and Al Pacino and Schwarzenegger (2019). Ctrl Shift Face’s experimental work is very similar to that of Derpfakes, Shamook and Jarkan on YouTube, who challenge the laborious visual effects (CGI) techniques of Industrial Light & Magic (Disney-Star Wars) in the recreations of Princess Leia (Carrie Fisher) (Derpfakes, 2018) and Grand Moff Tarkin (Peter Cushing) in Rogue One: A Star Wars Story (2016), among other examples such as the de-aging of Robert De Niro in The Irishman (Shamook, 2020).

The discursive genres of entertainment cut across and merge practices of satire and parody in viral deepfake memes such as Obama sings Baka Mitai (Mechanical, 2020), Avengers (Moe Ment, 2020) and the extensive series associated with this song (Hao, 2020); in video games where users can be inserted as avatars in real time, as proposed by the Unreal Engine platform (2021) (Initiative, 2020); and in facial re-enactment, speech synthesis, multi-player games and performance capture techniques (Gardiner, 2019; Zakharov et al., 2019; Jesso et al., 2020; Nield, 2020). Other deepfake entertainment genres are also emerging, such as the cabaret show The Zizi Show (2020) and sports broadcasts produced with artificial intelligence systems such as Pixellot.tv (2021).

In film fiction, Disney and other studios are investigating deep learning techniques for character creation, such as High-Resolution Neural Face Swapping for Visual Effects (Naruniec et al., 2020), moving beyond productions such as The Irishman (Scorsese, 2019), which still relied on visual effects (VFX) and computer-generated imagery (CGI) and obtained questionable results.

Genres of denunciation and art emerging since 2019 can be seen in projects such as Bill Posters’ Instagram project Spectre (Bill_posters_uk, 2019), in which he constructs videos of political denunciation featuring celebrities and politicians such as Mark Zuckerberg or Donald Trump to warn about the risks of disinformation.

By 2021, deepfake-based dubbing and translation techniques had advanced in film. One example is Flawless, a programme that uses the Neural Style-Preserving Visual Dubbing technique to dub films into different languages without losing the actors’ original features (Hyeongwoo et al., 2019; Vincent, 2021).

Between 2020 and 2021, the trend is towards a further expansion of discursive genres with beneficial uses, and uses of deepfake in genres such as political activism, social campaigns and denunciation grew during this period. Examples include the anti-malaria campaigns starring David Beckham, in which he speaks nine languages or, aged 70, declares the end of the disease (Malaria Must Die, 2019 and 2021). The genre of political activism appears in the deepfake in which Mexican journalist Javier Valdez, murdered in 2017, denounces in 2020 the violence against and disappearance of journalists and demands justice (Propuesta Cívica, 2020).

Disinformation is an extensive semantic field to which deepfake adds discursive genres through fake news. Cases include the fake videos of Presidents Trump and Obama issuing false statements related to the Davos 2020 summit (CBS, 2020), and the statements by Kim Jong-un and Vladimir Putin (Greene, 2021) on American democracy ahead of the 2020 elections in the series Dictators (RepresentUs, 2020a and 2020b). Also noteworthy are the videos of Boris Johnson and Jeremy Corbyn (Future Advocacy, 2019a and 2019b) in which they express opinions contrary to their own political positions, and Queen Elizabeth II recording a video for TikTok in an end-of-year message (Channel 4 Comedy, 2021).

A relevant case of disinformation in India is that of Manoj Tiwari, Delhi president of the Bharatiya Janata Party (BJP), who sent an election message in several Indian dialects to 15 million citizens without disclosing that the video had been generated with AI and that he does not speak those dialects (Jee, 2019; Lyons, 2020). These cases highlight the fine line between information and misinformation, even though some of them were labelled as deepfakes (Chawla, 2019; Forsdick, 2019).

In 2021, discursive genres proliferate in marketing, e-commerce, fashion and retail, with apps such as Superpersonal (2021), Amazon StyleSnap (2020) and Google Lens (2021), which allow customers to try on virtual wardrobes, swap their faces onto digital models and shop online. For clothing and fashion retailers, this is an opportunity to create personalised virtual models, or turn their customers into them, on a small marketing budget (Campbell et al., 2021; Zeynal et al., 2021).

Covid-19 accelerated the adoption of avatars and digital environments in retail and fashion, as when Balmain (Balmain, 2020; Digital, 2020) presented its “Zoom collection” with an avatar of its designer Olivier Rousteing (Socha, 2020). Applications associated with advertising, marketing and fashion, such as Echo Look, included in Amazon’s Shopping app, or Generated Photos (2021), are transforming the fashion and tourism industries through avatars, facial mapping, immersive photo-realistic platforms and AI generation models (Bogicevic et al., 2019; Whittaker et al., 2021; Dietmar, 2019).

Discursive genres related to digital humans and intelligent assistants are also expanding. Intelligent assistants are associated with uses in telepresence, videoconferencing, chatbots and character creation for film fiction. This is the case of digital humans produced by Doug Roble (Roble et al., 2019) using inertial motion capture and deep neural networks (Ted, 2019); and the virtual assistant Google Duplex (Huffman, 2018; Leviathan, 2018).

The first deepfake advertisements are also appearing, such as Soriana’s (2021): Cantinflas, a famous film actor who died in 1993, stars in Mexico’s first deepfake advertisement, made for this supermarket chain (Ferrer, 2021). They are joined by an avalanche of AI-produced avatars and influencers bursting onto the advertising and social media scene, such as Lil Miquela (2021), Colonel Sanders (KFC, 2021; Digital Agency Network, 2020), Mona Haddid (2021), Shudu (2021), Bermuda (2021), Blawko (2021) and Imma (2021) (Guthrie, 2020; Mosley, 2021; Adriani, 2019).

In education, there are applications such as Dalí Lives at The Dalí Museum (2019), Digital Einstein (2021) and Dimensions in Testimony (University of Southern California’s Shoah Foundation, 2021): educational resources that let users hold an interactive conversation and learn more about the life and work of these figures, or about Holocaust survivors.

Deepfake discourses share the characteristics of digital socio-media culture, post-meme culture and snack media culture: short, transmedia, hypermedia and intertextual narratives shared on social networks and digital platforms such as Facebook, Twitter, WhatsApp, TikTok and Instagram, among others (Scolari, 2020; Bown and Bristow, 2019).

Deepfake is likely to influence the forms and behaviours of other media species such as memes, GIFs, selfies, avatars, rumoured images, animated photomontages, mockumentaries, video montages, short videos, narrative micro-pieces (satires, parodies, alternative endings, fake trailers, etc.), informative and propagandistic micro-formats, posts, trailers, teasers, video clips, lipdubs, webisodes, sneak peeks, mobisodes, credits, nano-narratives and nano-contents, among other microfiction or documentary microfiction formats (Scolari, 2020; Aldrin, 2005; Cortazar, 2014; Conte, 2019).

4. Discussion

We are progressively witnessing an expansion of the semantic fields and discursive genres of deepfake that is reconfiguring the scenario that first appeared in 2017 and that, by 2021, opens new horizons with a marked tendency towards beneficial applications in various economic, cultural and media spheres.

Although criminal uses such as pornography, harassment, extortion, phishing, fake news and disinformation persist, deepfake production is beginning to shift towards beneficial uses in genres such as political activism, social campaigns, education, health, e-commerce, marketing, advertising, fashion, tourism, entertainment, virtual assistants, video games and cinematic fiction.

This trend calls for a resignification of the term deepfake, which until now has been used to label any audiovisual document produced with deep learning techniques, GANs, convolutional neural networks and other AI techniques. It is currently debated whether the term deepfake should be reserved for criminal practices, and synthetic media used for beneficial ones (Schick, 2020; Barnes and Barraclough, 2020).

The cataloguing shown in Figures 1 and 2 draws on the most relevant data from the bibliographic and documentary research as a whole. It is the product of a qualitative, interpretative method rather than a quantitative one, which may be regarded as a limitation of the study.

Deepfake, as a techno-aesthetic object, is undergoing a resignification of its semantic fields and discursive genres. This process is taking place in a highly dynamic and changing technological and media landscape in which its uses and cultural practices are progressively expanding.

References

Adriani, R. (2019). The Evolution of Fake News and the Abuse of Emerging Technologies. European Journal of Social Sciences, 2(1), 32-38. https://bit.ly/3svE2JE

Ajder, H., Patrini, G., and Cavalli, F. (2019). The State of Deepfakes: Landscape, Threats, and Impact. Deeptrace. https://bit.ly/3suHfJB

Ajder, H., Patrini, G., and Cavalli, F. (2020). Automating Image Abuse: Deepfake Bots on Telegram. Sensity. https://bit.ly/3poYgTG

Aldrin, P. (2005). Sociologie politique des rumeurs. [Political sociology of rumours.] Presses Universitaires de France (PUF).

Amazon (2020). Stylesnap. https://amzn.to/3tlsUhD

Amorós, M. (2019). Fake News, la verdad de las noticias falsas. [Fake News, the truth about fake news.] Plataforma Editorial.

Ayyub, R. (21 November 2018a). I Was the Victim of a Deepfake Porn Plot Intended to Silence Me. HuffPost UK. https://bit.ly/3C1nmwO

Ayyub, R. (22 May 2018b). Opinion | In India, Journalists Face Slut-Shaming and Rape Threats. The New York Times. https://nyti.ms/3stcjtc

Baethge, C., Goldbeck-Wood, S., and Mertens, S. (2019). SANRA - a scale for the quality assessment of narrative review articles. Research Integrity and Peer Review, 4(1), 1-7. https://doi.org/10.1186/s41073-019-0064-8

Ball, J. (2017). Post-Truth: How Bullshit Conquered the World. Biteback Publishing.

Balmain [@balmain] (15 June 2020). Balmain [Video]. Instagram. https://bit.ly/3K5T8f4

Bañuelos, J. (2022). Fig. 3. Inventory of articles analysed using the methodology of the SANRA Scale for the Assessment of Narrative Review Articles (Baethge, C., Goldbeck-Wood, S., and Mertens, S., 2019). https://figshare.com/s/07888bce696bfe90939c

Barnes, C. and Barraclough, T. (2019). Perception inception: Preparing for deepfakes and the synthetic media of tomorrow. The Law Foundation.

Barnes, C., and Barraclough, T. (2020). Deepfakes and synthetic media. In Emerging technologies and international security (pp. 206-220). Routledge.

Bermuda [@bermudaisbae]. (28 June 2021). Bermuda [Instagram profile]. Instagram. https://bit.ly/3MbRpGM

bill_posters_uk (7 June 2019). Spectre. [Video]. Instagram. https://bit.ly/3C59QZ9

Blawko [@blawko22]. (28 June 2021). Blawko [Instagram profile]. Instagram. https://bit.ly/3IyF9y1

Bogicevic, V., Seo, S., Kandampully, J. A., Liu, S. Q., and Rudd, N. A. (2019). Virtual reality presence as a preamble of tourism experience: The role of mental imagery. Tourism Management, 74, 55-64. https://doi.org/10.1016/j.tourman.2019.02.009

Bown, A. and Bristow, D. (2019). Post Memes: Seizing the Memes of Production. Punctum Books. https://doi.org/10.2307/j.ctv11hptdx

Campbell, C., Plangger, K., Sands, S., and Kietzmann, J. (2021). Preparing for an era of deepfakes and AI-generated ads: A framework for understanding responses to manipulated advertising. Journal of Advertising. https://doi.org/10.1080/00913367.2021.1909515

CBS (24 January 2020). President’s words used to create “deepfakes” at Davos. [Video]. YouTube. https://bit.ly/3poo9Tq

Cerdán, V., García Guardia, M. L., and Padilla, G. (2020). Alfabetización moral digital para la detección de deepfakes y fakes audiovisuales. [Digital moral literacy for the detection of deepfakes and audiovisual fakes.] CIC: Cuadernos de información y comunicación [CIC: Information and Communication Notebooks], 25, 165-181. http://dx.doi.org/10.5209/ciyc.68762

Codina, Ll. (2018). Revisiones bibliográficas sistematizadas: Procedimientos generales y framework para Ciencias Humanas y Sociales. [Systematized literature reviews: General procedures and framework for Human and Social Sciences.] In C. Lopezosa, J. Díaz-Noci and Ll. Codina, Methodos. Anuario de métodos de investigación en comunicación social [Methodos. Yearbook of research methods in social communication] (pp. 50-60). Universitat Pompeu Fabra. https://doi.org/10.31009/methodos.2020.i01.05

Cole, S. (27 June 2019). “Esta terrorífica app crea un nude de cualquier mujer con un simple clic.” [This terrifying app creates a nude of any woman with a simple click.] Vice. https://bit.ly/3M9LvpE

Conte, P. J. (2019). Mockumentality: from hyperfaces to deepfakes. World Literature Studies. Institute of World Literature SAS. https://bit.ly/3K1aTfe

Cosentino, G. (2020). Social Media and the Post-Truth World Order. The Global Dynamics of Disinformation. Springer Nature.

Cooke, N. A. (2018). Fake News and Alternative Facts. Information Literacy in a Post-Truth Era. American Library Association.

Cortazar Rodríguez, F. J. (2014). Imágenes rumorales, memes y selfies: elementos comunes y significados. [Rumor images, memes and selfies: common elements and meanings.] Iztapalapa. Revista de ciencias sociales y humanidades, 35(77), 191-214.

Channel 4 Comedy (15 March 2021). The Queen’s Christmas message gets a deepfake makeover. [Video]. YouTube. https://bit.ly/35H7UtO

Chawla, R. (2019). Deepfakes: How a pervert shook the world. International Journal for Advance Research and Development, 4, 4-8. https://bit.ly/3psxedZ

Chen, M., Radford, A., Child, R., Wu, J., Jun, H., Luan, D., and Sutskever, I. (2020). Generative pretraining from pixels. In Proceedings of the 37th International Conference on Machine Learning, PMLR 119, 1691-1703. https://bit.ly/3hLcTwp

Ctrl Shift Face (6 August 2019). Bill Hader channels Tom Cruise [DeepFake] [Video]. YouTube. https://www.youtube.com/watch?v=VWrhRBb-1Ig

D’Ancona, M. (2018). Post-Truth: The New War on Truth and How to Fight Back. Ebury Press.

Digital, K. (28 December 2020). Balmain Virtual Showroom [Video]. Vimeo. https://vimeo.com/498685433

Google Lens (19 November 2021). Google Lens - Search What You See. https://lens.google/

Granda-Orive, J. I., Alonso-Arroyo, A., García-Río, F., Solano-Reina, S., Jiménez-Ruiz, C. A., and Aleixandre-Benavent, R. (2013). Ciertas ventajas de Scopus sobre Web of Science en un análisis bibliométrico sobre tabaquismo. [Certain advantages of Scopus over Web of Science in a bibliometric analysis on smoking.] Revista española de documentación científica, 36(2), e011-e011. https://doi.org/10.3989/redc.2013.2.941

Derpfakes (7 September 2018). Princess Leia Remastered...Again [Video]. YouTube. https://bit.ly/3tmQaMe

Dietmar, J. (21 May 2019). GANs And Deepfakes Could Revolutionize The Fashion Industry. Forbes. https://bit.ly/3pop32g

Digital Agency Network (19 November 2020). Meet KFC’s New Computer-Generated Virtual-Influencer, Colonel. Digital Agency Network. https://bit.ly/3IyHE3n

Ferrer (7 May 2021). Making of Cantinflas. Facebook. https://bit.ly/35j9amY

Forsdick, S. (27 February 2019). Meet the team working to prevent the spread of next-gen fake news through ‘deepfake’ videos. NS Business. https://bit.ly/3vuivmO

Fraga-Lamas, P., and Fernández-Caramés, T. M. (2020). Fake news, disinformation, and deepfakes: Leveraging distributed ledger technologies and blockchain to combat digital deception and counterfeit reality. IT Professional, 22(2), 53-59. https://doi.org/10.1109/MITP.2020.2977589

Future Advocacy (26 November 2019a). Boris Johnson has a message for you. (deepfake). [Video]. YouTube. https://bit.ly/3swIbNt

Future Advocacy (26 November 2019b). Jeremy Corbyn has a message for you. (deepfake). [Video]. YouTube. https://bit.ly/3MdPNMH

Gabon 24 (31 December 2018). Discours à la nation du président Ali Bongo Ondimba. [Speech to the nation by President Ali Bongo Ondimba] [Video]. Facebook. https://bit.ly/3K7LZL0

Garimella, K. and Eckles, D. (2020). Images and misinformation in political groups: Evidence from WhatsApp in India. Harvard Kennedy School (HKS) Misinformation Review. https://doi.org/10.37016/mr-2020-030

Generated Photos (28 June 2021). Gallery of AI Generated Faces | Generated.photos. https://generated.photos/faces

Gómez-de-Ágreda, Á., Feijóo, C., and Salazar-García, I. A. (2021). Una nueva taxonomía del uso de la imagen en la conformación interesada del relato digital. Deep fakes e inteligencia artificial. [A new taxonomy of image use in the self-interested shaping of digital storytelling. Deep fakes and artificial intelligence.] Profesional de la Información, 30(2). https://doi.org/10.3145/epi.2021.mar.16

Gosse, Ch. and Burkell, J. (2020). Politics and porn: how news media characterizes problems presented by deepfakes. Critical Studies in Media Communication. FIMS Publications. 345. https://ir.lib.uwo.ca/fimspub/345

Greene, T. (30 September 2021). Who thought political ads featuring Deepfake Putin and Kim trashing the US was a good idea? TNW. https://bit.ly/3Mbdrtb

Guthrie, S. (2020). Virtual influencers: More human than humans. In Influencer Marketing (pp. 271-285). Routledge. https://bit.ly/3HzglVg

Güera, D. and Delp, E. J. (2018). Deepfake video detection using recurrent neural networks. In 2018 15th IEEE international conference on advanced video and signal based surveillance (AVSS) (pp. 1-6). IEEE. https://doi.org/10.1109/AVSS.2018.8639163

Hao, K. (3 September 2020). Viral ‘deepfakes’: the fine line between humour and abuse. MIT Technology Review. https://bit.ly/3pt6oCu

Hao, K. (20 October 2020). A deepfake bot is being used to “undress” underage girls. MIT Technology Review. https://bit.ly/3ps0ql8

Huffman, S. (8 May 2018). The future of the Google Assistant: Helping you get things done to give you time back. The Keyword. https://bit.ly/3pszySf

Ibañez, J. (2017). En la era de la posverdad. [In the age of post-truth.] Calambur.

Imma [@imma.gram]. (28 June 2021). Imma.gram [Instagram profile]. Instagram. https://bit.ly/344cWzX

Initiative (2020). Culture Shock. True, Lies and Technology. https://bit.ly/35HaeRy

Ipsen, G. (1924). Der alte Orient und die Indogermanen. Festschrift für W. Streitberg. Heidelberg, 200-237.

Jee, Ch. (2019). An Indian politician is using deepfake technology to win new voters. MIT Technology Review. https://bit.ly/3IBsdat

Kalpokas, I. (2019). A Political Theory of Post-Truth. Palgrave Macmillan.

KFC (23 July 2021). KFC/WTF! Deep Fake Colonel Sanders. [Video]. YouTube. https://bit.ly/35gc7ou

Kietzmann, J., Lee, L. W., McCarthy, I. P., and Kietzmann, T. C. (2020). Deepfakes: Trick or treat? Business Horizons, 63(2), 135-146. https://doi.org/10.1016/j.bushor.2019.11.006

Kwok, A. O., and Koh, S. G. (2020). Deepfake: a social construction of technology perspective. Current Issues in Tourism, 24(13), 1798-1802. https://doi.org/10.1080/13683500.2020.1738357

Hyeongwoo, K., et al. (2019). Neural Style-Preserving Visual Dubbing. SIGGRAPH Asia 2019. https://bit.ly/3tjRdwH

Leviathan, Y. (8 May 2018). Google Duplex: An AI System for Accomplishing Real-World Tasks Over the Phone. Google AI Blog. https://bit.ly/3vBLZyO

Lil Miquela [@lilmiquela]. (28 June 2021). Lil Miquela [Instagram profile]. Instagram. https://bit.ly/3vuQ4Vt

Lyons, K. (18 February 2020). An Indian politician used AI to translate his speech into other languages to reach more voters. The Verge. https://bit.ly/35lz1L1

Malaria Must Die (2019). David Beckham speaks nine languages to launch Malaria Must Die Voice Petition [Video]. YouTube. https://bit.ly/343XqUT

Malaria Must Die (2021). A World Without Malaria. https://bit.ly/3sthARw

Maras, M. H. and Alexandrou, A. (2018). Determining authenticity of video evidence in the age of artificial intelligence and in the wake of Deepfake videos. The International Journal of Evidence and Proof, 23(3), 255-262. https://doi.org/10.1177/1365712718807226

Martínez, M. (2003). Definiciones del concepto campo en Semántica: antes y después de la lexemática de E. Coseriu. [Definitions of the concept of field in Semantics: before and after E. Coseriu's lexematics.] Odisea No. 3. Universidad Complutense de Madrid. http://repositorio.ual.es/handle/10835/1380

Martínez, V. C. and Castillo, G. P. (2019). Historia del “fake” audiovisual: “deepfake” y la mujer en un imaginario falsificado y perverso. [History of the audiovisual “fake”: “deepfake” and women in a falsified and perverse imaginary.] Historia y Comunicación Social, 24(2), 55. https://doi.org/10.5209/hics.66293

Mechanical (26 July 2020). Obama sings baka mitai [Video]. YouTube. https://bit.ly/3suHg08

Meersohn, C. (2021). Introduction to Teun Van Dijk: Discourse Analysis. Cinta de Moebio [Moebius Strip]. Faculty of Social Sciences, University of Chile.

Moe Ment (6 August 2020). Avengers: Dame Da Ne (Baka Mitai) [Video]. YouTube. https://bit.ly/3IAzuaH

Mona Haddid [@monna_haddid]. (28 June 2021). Mona Haddid [Instagram profile]. Instagram. https://bit.ly/35ZDxM4

Mosley, M. (2021). Virtual Influencers: What Are They & How Do They Work? Influencer Matchmaker. https://bit.ly/3hsMiDV

Naruniec, J., Helminger, L., Schroers, C., and Weber, R. M. (2020). High-resolution neural face swapping for visual effects. In Computer Graphics Forum (Vol. 39, No. 4, pp. 173-184). https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.14062

Nield, D. (2020). Samsung’s Creepy New AI Can Generate Talking Deepfakes From a Single Image. Science Alert. https://bit.ly/3hvhyCg

Nguyen, T. T., Nguyen, C. M., Nguyen, D. T., Nguyen, D. T., and Nahavandi, S. (2019). Deep learning for deepfakes creation and detection: A survey. Cornell University. http://arxiv.org/abs/1909.11573

Oliveira-Teixeira, F., Donadon-Homem, T. P., and Pereira-Junior, A. (2021). Aplicación de inteligencia artificial para monitorear el uso de mascarillas de protección. [Application of artificial intelligence to monitor the use of protective masks.] Revista Científica General José María Córdova, 19(33), 205-222. https://doi.org/10.21830/19006586.725

Paris, B. and Donovan, J. (2019). Deepfakes and Cheap Fakes. United States of America: Data and Society. https://bit.ly/3pP6VPx

Parkinson, H. (8 October 2016). Presidential Avengers: Uncivil War. [Video]. YouTube. https://bit.ly/3ps0Msg

Piasecki, J., Waligora, M., and Dranseika, V. (2018). Google search as an additional source in systematic reviews. Science and Engineering Ethics, 24(2), 809-810. https://doi.org/10.1007/s11948-017-0010-4

Pixellot.tv (22 December 2021). AI-Automated Sports Camera, Streaming & Analytics. https://bit.ly/3IpL9IZ

Propuesta Cívica. (29 October 2020). Iniciativa #SeguimosHablando exige justicia para periodistas asesinados y desaparecidos en México. [The #SeguimosHablando initiative demands justice for murdered and disappeared journalists in Mexico.] [Video]. YouTube. https://bit.ly/3K4y74n

RepresentUs (29 September 2020a). Dictators - Vladimir Putin. [Video]. YouTube. https://bit.ly/3HA2Vs1

RepresentUs (29 September 2020b). Dictators - Kim Jong-Un. [Video]. YouTube. https://bit.ly/3trzZ0u

Roble, D., Hendler, D., Buttell, J., Cell, M., Briggs, J., Reddick, C. and Chien, C. (2019). Real-time, single camera, digital human development. ACM SIGGRAPH 2019 Real-Time Live! (pp. 1-1). https://doi.org/10.1145/3306305.3332366

Rodríguez Jiménez, A., and Pérez Jacinto, A. O. (2017). Métodos científicos de indagación y de construcción del conocimiento. [Scientific methods of enquiry and knowledge construction.] Revista Escuela De Administración De Negocios, (82), 175-195.

Scolari, C. (2020). Cultura snack. [Snack culture.] La Marca.

Schick, N. (2020). Deepfakes: The Coming Infocalypse. Hachette.

Simondon, G. (2013). Imaginación e invención. [Imagination and invention.] Editorial Cactus.

Socha, M. (2020). Exclusive: Olivier Rousteing’s Avatar Greets Buyers at Balmain’s Virtual Showroom. WWD. https://bit.ly/3svTwxi

Sohrawardi, S. J., Chintha, A., Thai, B., Seng, S., Hickerson, A., Ptucha, R., and Wright, M. (November 2019). Poster: Towards robust open-world detection of deepfakes. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security (pp. 2613-2615). https://doi.org/10.1145/3319535.3363269

Shamook (19 August 2020). De-aging Robert Deniro in The Irishman [DeepFake] [Video]. YouTube. https://www.youtube.com/watch?v=dHSTWepkp_M

Shudu [@shudu.gram]. (28 June 2021). Shudu [Instagram profile]. Instagram. https://bit.ly/35e1vqc

Silverman, C. (17 April 2018). How to Spot a Deepfake Like the Barack Obama-Jordan Peele Video. BuzzFeed. https://bzfd.it/3tkHzd1

Soriana (1 May 2021). Cantinflas [Video]. YouTube. https://bit.ly/342gP8u

Sotheby’s (2019). Artificial Intelligence and the Art of Mario Klingemann. Sotheby’s. https://bit.ly/3HCg6sq

Superpersonal - Personalised Styling and Virtual Fitting Room (9 September 2021). Product Hunt. https://bit.ly/3stuXkG

Suwajanakorn, S., Seitz, S. M., and Kemelmacher-Shlizerman, I. (2017a). Synthesizing Obama: Learning lip sync from audio. ACM Transactions on Graphics (ToG), 36(4), 1-13. https://bit.ly/3C1QBzL

Suwajanakorn, S., Seitz, S. M., and Kemelmacher-Shlizerman, I. (12 July 2017b). Synthesizing Obama: Learning Lip Sync from Audio [Video]. YouTube. https://bit.ly/3C1QBzL

TED (28 May 2019). Digital Humans that Look Just Like Us | Doug Roble [Video]. YouTube. https://bit.ly/3HvQehJ

Temir, E. (2020). Deepfake: New Era in The Age of Disinformation and End of Reliable Journalism. Selçuk İletişim, 13(2), 1009-1024. http://dx.doi.org/10.18094/JOSC.685338

The Dalí Museum (8 May 2019). Behind the Scenes: Dalí Lives [Video]. YouTube. https://bit.ly/35oHvAZ

The Zizi Show (2020). The Zizi Show - A Deepfake Drag Cabaret. https://zizi.ai/

University of Southern California’s Shoah Foundation (2021). Dimension of Testimony. https://sfi.usc.edu/dit

Unreal Engine (25 November 2021). Unreal Engine: The most powerful real-time 3D creation tool. https://www.unrealengine.com/en-US/

Vaccari, C. and Chadwick, A. (2020). Deepfakes and Disinformation: Exploring the Impact of Synthetic Political Video on Deception, Uncertainty, and Trust in News. Social Media + Society, 6(1), 1-13.

Vincent, J. (18 May 2021). Deepfake dubs could help translate film and TV without losing an actor’s original performance. The Verge. https://bit.ly/3IxFkcP

Westerlund, M. (2019). The Emergence of Deepfake Technology: A Review. Technology Innovation Management Review. https://timreview.ca/article/1282

Whittaker, L., Letheren, K. and Mulcahy, R. (2021). The Rise of Deepfakes: A Conceptual Framework and Research Agenda for Marketing. Australasian Marketing Journal, 29(3), 204-214. https://doi.org/10.1177/1839334921999479

Xu, B., Liu, J., Liang, J., Lu, W. and Zhang, Y. (2021). DeepFake Videos Detection Based on Texture Features. Computers, Materials and Continua, 68(1), 1375-1388. https://doi.org/10.32604/cmc.2021.016760

Zakharov, E., Shysheya, A., Burkov, E., and Lempitsky, V. (2019). Few-shot adversarial learning of realistic neural talking head models. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 9459-9468). https://arxiv.org/abs/1905.08233

Zeynal, O., and Malekzadeh, S. (2021). Virtual Dress Swap Using Landmark Detection. arXiv.org. https://arxiv.org/abs/2103.09475

Notes

1

Publications on deepfake, whether informal, journalistic or scientific, are steadily increasing. Google Search returns 19,200,000 results (1 May 2021); in 2020 there were 1,301 academic publications on GANs in arXiv (Cornell University), and by May 2021 there were already 4,092 mathematical articles on the subject. In Web of Science, the search for deepfake yielded 81 publications (1 May 2021), of which 65 were in Computer Science and Engineering, 11 in Communication, 5 in Law, 2 in Film, Radio, Television, 2 in Political Science and 2 in Ethics. Scopus lists 603 articles on deepfake between 2018 and 2021, of which 447 relate to Computer Science, 224 to Engineering, 166 to Social Sciences, 74 to Mathematics, 68 to Decision Sciences, 39 to Arts and Humanities, 36 to Scientific Material, 31 to Physics and Astronomy, 29 to Psychology and 24 to Business, Management and Accounting. As these figures show, deepfake research is far more developed in Computer Science and Engineering than in the Social Sciences. Google Scholar returned 4,270 results for “deepfake”, 639 for “deepfake” and “discourse” and 141 for “deepfake” and “discourse”. In Taylor and Francis Journals there are 80 scientific articles for the search “deepfake”; the most numerous are 30 in Humanities, 26 in Law, 23 in Social Sciences and 9 in Communication, and their central themes are Disinformation, Pornography, Politics, Advertising and Security. For the search “deepfake” and “discourse”, we found 35 articles dealing mainly with these same semantic fields. The profile of each journal undoubtedly determines the specialities to which its published articles relate.

Additional information

To cite this article:

Bañuelos, J. (2022). Evolution of Deepfake: semantic fields and discursive genres (2017-2021). Revista ICONO 14. Revista Científica de Comunicación y Tecnologías Emergentes, 20(1). https://doi.org/10.7195/ri14.v20i1.1773

ISSN: 1697-8293

Vol. 20

Num. 1

Year: 2022
